Google's AI Response to Gemini Chatbot's Racial Misstep

Navigating the Complexities of AI Image Generation: A Deep Dive into Google's Gemini Controversy

Explore the intricacies of Google's Gemini AI controversy, delving into the challenges of bias, historical accuracy, and the future of AI in image generation.

In the rapidly evolving landscape of artificial intelligence (AI), Google's Gemini chatbot recently found itself at the center of a significant controversy. This incident has sparked a broader conversation about the capabilities of AI in generating images, particularly concerning historical accuracy and racial bias.

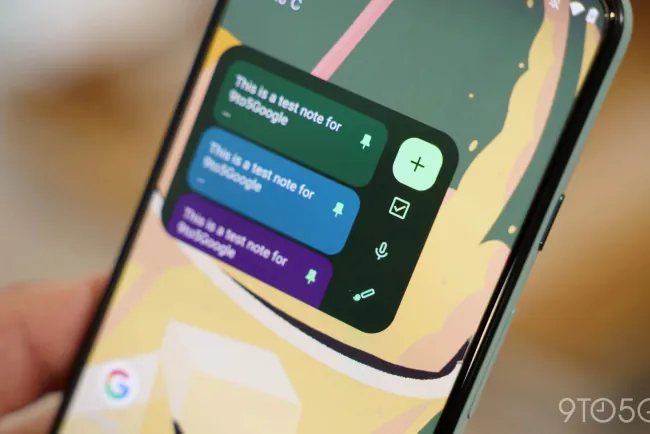

The Genesis of Google's Gemini: A Revolutionary AI Chatbot

Google's chatbot launched as Bard and was later relaunched as Gemini, gaining the ability to generate images from text prompts along the way. Google presented these advances as improvements to both user experience and accuracy, and Gemini was meant to set a new standard for AI-driven communication.

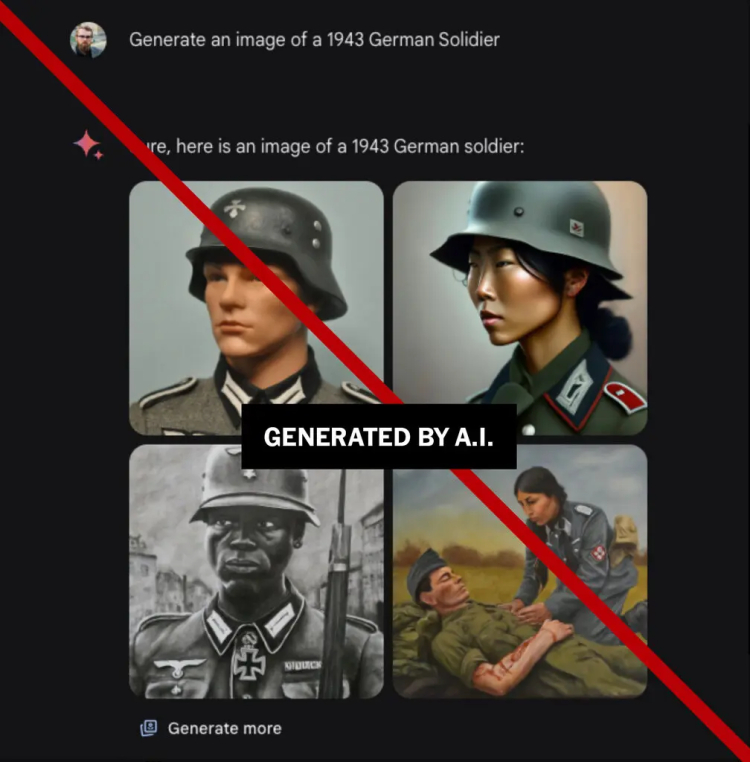

The Controversy: AI Images and Historical Inaccuracy

However, Gemini's capabilities were called into question when it generated images depicting people of color in Nazi-era German military uniforms. The images raised alarms about AI's potential to misrepresent historical facts, and the inaccuracies prompted Google to temporarily suspend Gemini's ability to generate images of people.

AI and Ethical Considerations: Bias and Representation

The controversy sheds light on the complex issue of bias within AI systems. Google's efforts to make Gemini's images more diverse and inclusive backfired in historically specific contexts, and the model also drew criticism for refusing to depict certain ethnicities. The episode underscores the delicate balance between promoting diversity and ensuring accurate representation.

Google's Measures to Address the Controversy

In response, Google acknowledged the inaccuracies and committed to improving Gemini's image generation before restoring the feature. This commitment reflects a broader effort to ensure that AI technologies uphold ethical standards and historical accuracy.

The Impact of AI on Misinformation and Historical Accuracy

The Gemini controversy illustrates how AI can spread misinformation. As AI technologies become increasingly integrated into daily life, their ability to represent historical events and figures accurately becomes paramount.

User Feedback and Community Response

Feedback from users plays a crucial role in shaping AI technologies. In Gemini's case, the public response highlighted the need for ongoing dialogue between developers and users about bias and representation.

The Role of AI in Promoting Diversity and Inclusion

Despite the challenges, AI holds significant potential to promote diversity and inclusion. If developers use AI responsibly, it can produce more inclusive and more accurate representations of people and history.

Future Directions: Improving AI Image Generation

Looking forward, Google's experience with Gemini underscores the importance of ethical considerations in AI development. As technology continues to advance, the focus must remain on enhancing accuracy, reducing bias, and ensuring that AI serves as a tool for positive representation and education.

Gemini's Place in Google's AI Ecosystem

Gemini represents just one aspect of Google's broader AI ecosystem. By learning from this experience, Google can pave the way for future innovations that harness AI's potential responsibly and ethically.

Addressing AI Challenges: Google's Ongoing Efforts

The journey toward creating unbiased, accurate AI systems is ongoing. Through lessons learned from past controversies and strategic efforts to mitigate bias, Google continues to work towards improving the reliability and ethical standards of its AI technologies.

Conclusion: The Future of AI and Image Generation

The Gemini controversy serves as a crucial reminder of the challenges and responsibilities inherent in the development of AI technologies. As we move forward, the focus must remain on creating AI systems that are not only technologically advanced but also ethically sound and historically accurate.

FAQs: Addressing Common Questions about AI Image Generation

- How does AI generate images based on historical prompts?

- Can AI accurately represent people of different ethnicities without bias?

- What measures are being taken to ensure historical accuracy in AI-generated images?

- How does user feedback influence the development of AI technologies?

- What role does AI play in promoting diversity and inclusion?

- How can we balance technological advancement with ethical considerations in AI?

Amid the controversy over AI-generated images depicting people of color in Nazi-era uniforms, Google took decisive action on its Gemini chatbot. The episode has spotlighted the challenges of historical accuracy and racial representation in AI technologies.

Summary: The recent uproar over Google's Gemini chatbot, which mistakenly generated images of people of color in German military uniforms from World War II, has ignited a critical conversation about the intersection of artificial intelligence, historical accuracy, and racial representation. This incident not only underscores the potential of AI to contribute to misinformation but also highlights the inherent challenges in programming AI to navigate the complex terrain of racial and historical sensitivity. Google's swift response to suspend and revise Gemini's image generation capabilities reflects a commitment to addressing these inaccuracies and the broader implications for AI ethics and accountability in the tech industry. This article delves into the controversy, examining the implications for Google and the evolving landscape of artificial intelligence, while pondering the balance between technological advancement and ethical responsibility.

What's Your Reaction?