Stable Diffusion 3.0: Revolutionizing AI Image Generation


- Introduction
  - Overview of Stable Diffusion 3.0
  - Significance in the AI Landscape
- Evolution of Image Generation Models
  - Brief History of Stability AI's Developments
  - Comparison with Previous Versions
- What's New in Stable Diffusion 3.0?
  - Enhanced Image Quality
  - Multi-Subject Prompt Handling
  - Improved Typography
- Breaking Down the Architecture
  - Introduction to Diffusion Transformers
  - Flow Matching: A New Era in Image Generation
- Technical Innovations Behind Stable Diffusion 3.0
  - The Role of Transformers in AI
  - Continuous Normalizing Flows (CNFs)
  - Conditional Flow Matching (CFM)
- Stable Diffusion 3.0's Typographical Advances
  - Overcoming Previous Limitations
  - Full Sentence Generation and Coherent Style
- Beyond Text-to-Image: Expanding Capabilities
  - 3D Image Generation
  - Video Generation Capabilities
- Comparative Analysis with Competitors
  - DALL-E 3, Ideogram, and Midjourney
  - Unique Features of Stable Diffusion 3.0
- User Experience and Accessibility
  - Model Size Variants and Their Implications
  - Open Model Philosophy of Stability AI
- Future Implications and Applications
  - Potential Impact on Creative Industries
  - Educational and Business Applications
- Community and Developer Engagement
  - Open Source Contributions
  - Integration in Various Platforms
- Challenges and Limitations
  - Technical and Ethical Considerations
  - Future Research Directions
- How to Get Started with Stable Diffusion 3.0
  - Accessing and Implementing the Model
  - Resources and Tutorials
- Case Studies and Success Stories
  - Examples of Creative Works
  - Business Use Cases
- FAQs
- Conclusion


Stable Diffusion 3.0 is Stability AI's latest text-to-image model. This article looks at its new diffusion transformer architecture, its improved image quality, and its better typography.

Introduction

In February 2024, Stability AI, a developer of generative artificial intelligence (AI) models, unveiled an early preview of Stable Diffusion 3.0, the next version of its flagship text-to-image model. The company says the new model improves on its predecessors in both image quality and performance.

Evolution of Image Generation Models

Since the original Stable Diffusion, Stability AI has released a series of increasingly capable image models. SDXL, released in July 2023, improved the Stable Diffusion base model. With Stable Diffusion 3.0, Stability AI aims to go further, with advances in image quality, performance, and typography.

What's New in Stable Diffusion 3.0?

Stable Diffusion 3.0 introduces a set of enhancements aimed at the quality and efficiency of generated images. In particular, the model is designed to handle prompts with multiple subjects more reliably and to render text inside images far better, an area where earlier Stable Diffusion models were weak.

Breaking Down the Architecture

At the heart of Stable Diffusion 3.0 is a new architecture that combines a diffusion transformer with flow matching. According to Stability AI, this combination makes more efficient use of compute and delivers better performance.
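
Diffusion transformers such as DiT process an image latent as a sequence of tokens: the latent is cut into small square patches and each patch is flattened into a vector. The sketch below illustrates only that generic patchify step in plain Python. It is not Stability AI's code; the function name `patchify` is hypothetical, and the sizes in the usage example (a 4-channel 64x64 latent, patch size 2) are illustrative assumptions. A real model would follow this step with a learned linear projection and positional embeddings, which are omitted here.

```python
def patchify(latent, p):
    """Split a C x H x W latent (nested lists) into flattened p x p patches.

    Returns (H // p) * (W // p) tokens in row-major order over the patch grid;
    each token is a list of C * p * p values (channel, then row, then column).
    """
    c = len(latent)
    h, w = len(latent[0]), len(latent[0][0])
    if h % p or w % p:
        raise ValueError("height and width must be divisible by the patch size")
    tokens = []
    for i in range(0, h, p):          # top row of each patch
        for j in range(0, w, p):      # left column of each patch
            tokens.append([latent[ch][i + di][j + dj]
                           for ch in range(c)
                           for di in range(p)
                           for dj in range(p)])
    return tokens


# Illustrative sizes only (hypothetical): a 4-channel 64x64 latent, patch size 2.
latent = [[[0.0] * 64 for _ in range(64)] for _ in range(4)]
tokens = patchify(latent, p=2)
print(len(tokens), len(tokens[0]))  # 1024 tokens of 16 values each
```

Halving the patch size quadruples the number of tokens, and self-attention cost grows quadratically with sequence length, so patch size is a central compute trade-off in this kind of model.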

Technical Innovations Behind Stable Diffusion 3.0

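Stable Diffusion 3.0 replaces the U-Net backbone used by earlier Stable Diffusion models with a transformer, the architecture behind most recent advances in AI. It pairs this diffusion transformer with flow matching, a technique for training Continuous Normalizing Flows (CNFs), which generate samples by integrating a learned velocity field that carries noise to data. Conditional Flow Matching (CFM) makes this training simulation-free: the network is regressed onto simple per-sample target velocities instead of solving the flow during training. Stability AI credits this approach with faster training and better image generation.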
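
To make the flow-matching idea concrete, here is a minimal one-dimensional sketch in plain Python. It assumes the straight-line (rectified-flow style) path x_t = (1 - t) * x0 + t * x1 between a noise sample x0 and a data sample x1, whose conditional target velocity is x1 - x0. `cfm_loss` is a Monte Carlo estimate of the CFM regression loss for any candidate velocity function, and `euler_sample` integrates the CNF's ODE dx/dt = v(x, t) from noise at t = 0 to data at t = 1. The function names, the toy dataset (every data point equal to 3.0), and the hand-written velocity fields are hypothetical; in Stable Diffusion 3.0 the velocity would be predicted by the diffusion transformer, and this is not Stability AI's training code.

```python
import random


def cfm_loss(velocity_fn, data, rng, n_draws=2000):
    """Monte Carlo estimate of the conditional flow matching loss
    E[(v(x_t, t) - (x1 - x0))^2] on the straight path from noise to data."""
    total = 0.0
    for _ in range(n_draws):
        x1 = rng.choice(data)            # data sample
        x0 = rng.gauss(0.0, 1.0)         # standard-normal noise sample
        t = rng.random()                 # time in [0, 1)
        x_t = (1.0 - t) * x0 + t * x1    # point on the conditional path
        target = x1 - x0                 # conditional velocity dx_t/dt
        total += (velocity_fn(x_t, t) - target) ** 2
    return total / n_draws


def euler_sample(velocity_fn, x0, steps=50):
    """Integrate dx/dt = v(x, t) from t = 0 (noise) to t = 1 (data) with Euler steps."""
    x, dt = x0, 1.0 / steps
    for k in range(steps):
        x += dt * velocity_fn(x, k * dt)
    return x


# Toy case (hypothetical): every data point is 3.0, so the exact velocity
# field is known in closed form: v(x, t) = (3.0 - x) / (1 - t).
data = [3.0]


def exact_v(x, t):
    return (3.0 - x) / (1.0 - t)


def zero_v(x, t):
    return 0.0


rng = random.Random(0)
print(cfm_loss(exact_v, data, rng))   # ~0: the exact field matches every target
print(cfm_loss(zero_v, data, rng))    # ~10: E[(3 - x0)^2] = 9 + 1
print(euler_sample(exact_v, x0=rng.gauss(0.0, 1.0)))  # ~3.0
```

Because each training target depends only on one (x0, x1) pair, the loss never requires solving the ODE; the solver is needed only at sampling time. That is what "simulation-free" means here.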

Stable Diffusion 3.0's Typographical Advances

The model's typography improvements are notable: it can render full sentences in a coherent style, with greater accuracy than earlier versions. Stability AI attributes these gains to the new transformer architecture and to additional text encoders integrated into Stable Diffusion 3.0.

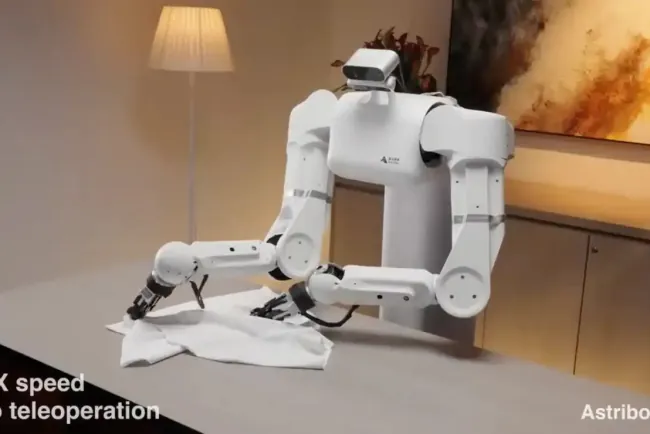

Beyond Text-to-Image: Expanding Capabilities

Stability AI plans to use Stable Diffusion 3.0 as a foundation for a broader range of visual models, including 3D and video generation. This reflects the company's aim of building versatile, open models that can be adapted to many creative and practical applications.

Comparative Analysis with Competitors

Stable Diffusion 3.0 competes with models such as DALL-E 3, Ideogram, and Midjourney. Its main points of difference are its diffusion transformer and flow matching architecture and the range of capabilities Stability AI plans to build on it.

User Experience and Accessibility

Stability AI plans to offer Stable Diffusion 3.0 in multiple model sizes, ranging from 800 million to 8 billion parameters in the preview, so that users can balance output quality against hardware requirements. Combined with the company's open model philosophy, this keeps the technology adaptable and accessible.
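
Model size matters in practice largely because of memory. As a rough illustration, the sketch below estimates the memory needed just to hold a model's weights, assuming 2 bytes per parameter (16-bit floating point). It ignores activations, text encoders, the image autoencoder, and framework overhead, so real requirements are higher. The parameter counts are the 800 million and 8 billion endpoints of the range Stability AI gave for the preview; the helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Weights-only memory estimate in gigabytes (1 GB = 10**9 bytes).

    bytes_per_param=2 corresponds to fp16/bf16 weights; use 4 for fp32.
    """
    return num_params * bytes_per_param / 1e9


for params in (800_000_000, 8_000_000_000):
    print(f"{params / 1e9:.1f}B parameters: ~{weight_memory_gb(params):.1f} GB in 16-bit")
```

Under these assumptions the tenfold spread in parameters becomes a tenfold spread in weight memory (about 1.6 GB versus 16 GB), which is why the smaller variants are the more realistic option on consumer hardware.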

Future Implications and Applications

The implications of Stable Diffusion 3.0 extend beyond AI art. Its capabilities could give creative industries, education, and business new tools for expression and innovation.

Community and Developer Engagement

Stability AI encourages open source contributions and community engagement, fostering a collaborative ecosystem that drives the model's continuous improvement and adaptation.

Challenges and Limitations

While Stable Diffusion 3.0 represents a significant advancement, it also faces technical and ethical challenges. Ongoing research and dialogue are essential to navigate these complexities and ensure responsible use.

How to Get Started with Stable Diffusion 3.0

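During the early preview, access to Stable Diffusion 3.0 is through a waitlist; Stability AI says the preview phase is for gathering feedback to improve the model's performance and safety ahead of an open release. As with earlier Stable Diffusion releases, community resources and tutorials can then help users explore its capabilities.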

Case Studies and Success Stories

As access widens, creative works and business applications built on Stable Diffusion 3.0 will show how versatile and effective it is in practice.

FAQs

- How does Stable Diffusion 3.0 differ from previous versions?

- What are the key benefits of the new diffusion transformer architecture?

- Can Stable Diffusion 3.0 generate 3D images?

- How can developers contribute to or customize Stable Diffusion 3.0?

- What resources are available for learning how to use Stable Diffusion 3.0?

Conclusion

Stable Diffusion 3.0 is a significant step forward for text-to-image generation. With its new architecture, improved capabilities, and broad range of potential applications, it brings AI-driven creativity closer to everyday use. As the model moves from early preview to wider release, it reflects the ingenuity and ambition of Stability AI and promises to inspire and empower creators around the world.
